Computational hardware is changing quickly as machine learning (ML) and artificial intelligence (AI) mature. This shift has significant implications for DevOps professionals who manage AI and ML applications. This article looks at the key insights and challenges raised by specialized AI and ML hardware, and at its impact on DevOps practices.

Specialized Hardware Revolution

Traditionally, general-purpose processors such as CPUs, and later GPUs, sufficed for most workloads. However, the surge in AI and ML demand has created a need for specialized hardware tailored to specific tasks, such as the large matrix operations at the core of neural network training and inference. This shift is driven by the need for greater efficiency and speed, especially where general-purpose hardware hits performance limits.

Competition and Innovation

While NVIDIA has historically dominated the AI chip market, competition is intensifying. Major tech companies such as Google (with its Tensor Processing Units) and Amazon (with its Trainium and Inferentia chips) are investing in custom AI chips, challenging established players and driving innovation. Startups are also entering the market with their own approaches to AI hardware.

DevOps and Hardware Diversity

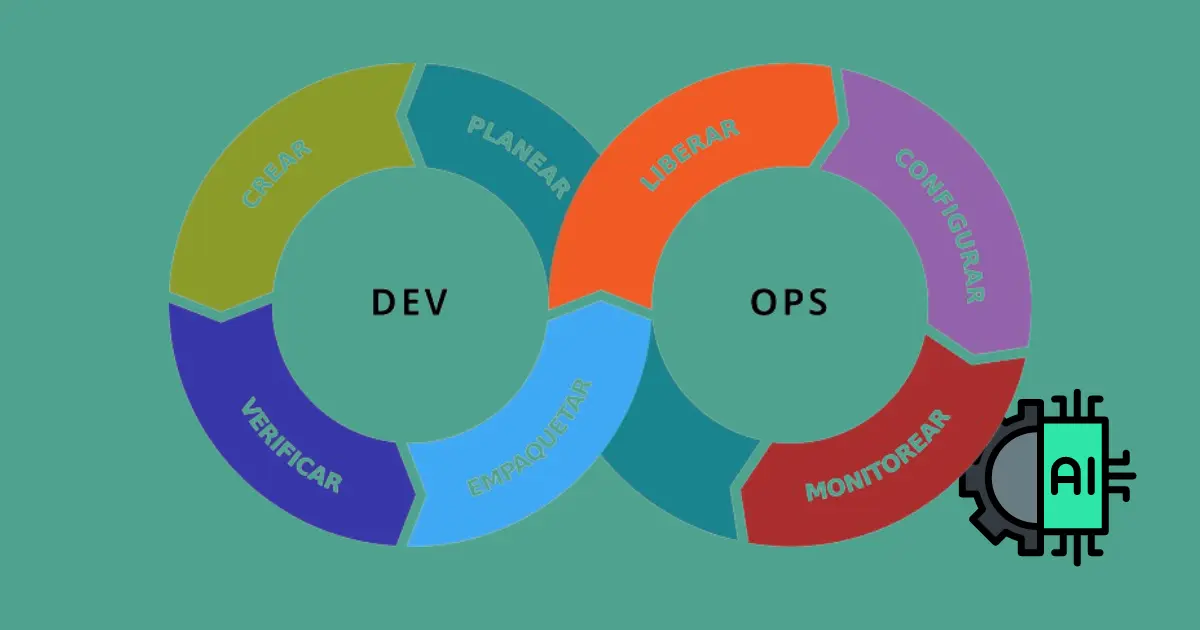

The proliferation of specialized hardware presents new challenges for DevOps teams, particularly around performance portability: the ability of the same application to run efficiently on different computing architectures. Achieving it takes deliberate effort, because code tuned for one chip can run slowly, or produce slightly different numerical results, on another. Continuous integration and deployment pipelines also become more complex, since each change must be tested and validated across multiple hardware configurations rather than a single target.
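One way to picture validation across hardware configurations is a check that every backend agrees with a reference result within a floating-point tolerance. The sketch below is illustrative only: `dot_naive` and `dot_reversed` are hypothetical stand-ins for real CPU, GPU, or accelerator code paths, `find_mismatches` is a made-up helper name, and the tolerance is an assumed value that a real project would choose for its own workload.

```python
import math
from typing import Callable, Dict, List, Sequence

Vector = Sequence[float]
DotFn = Callable[[Vector, Vector], float]


def dot_reference(a: Vector, b: Vector) -> float:
    """Reference dot product, accumulated with math.fsum for accuracy."""
    return math.fsum(x * y for x, y in zip(a, b))


def dot_naive(a: Vector, b: Vector) -> float:
    """Hypothetical backend: accumulates left to right."""
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total


def dot_reversed(a: Vector, b: Vector) -> float:
    """Hypothetical backend: accumulates in the opposite order,
    mimicking hardware that schedules the additions differently."""
    total = 0.0
    for x, y in zip(reversed(a), reversed(b)):
        total += x * y
    return total


def find_mismatches(
    backends: Dict[str, DotFn],
    a: Vector,
    b: Vector,
    rel_tol: float = 1e-9,  # assumed tolerance, not a recommendation
) -> List[str]:
    """Return the names of backends whose result differs from the reference."""
    expected = dot_reference(a, b)
    return [
        name
        for name, fn in backends.items()
        if not math.isclose(fn(a, b), expected, rel_tol=rel_tol)
    ]


if __name__ == "__main__":
    a = [0.1 * i for i in range(1, 1001)]
    b = [1.0 / i for i in range(1, 1001)]
    backends = {"naive": dot_naive, "reversed": dot_reversed}
    print("mismatching backends:", find_mismatches(backends, a, b))
```

In a real pipeline, each backend would typically run on its own hardware runner, and a non-empty mismatch list would fail the build for that configuration.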

Performance Portability Solutions

DevOps teams need concrete strategies for performance portability as hardware evolves. Four practices help: containerization packages an application with its dependencies so it deploys consistently across environments; benchmarking measures how the same workload performs on each target; profiling shows where time is spent on a given chip; and portability libraries abstract hardware-specific code behind a common interface. Agile methodologies complement these practices by letting teams adjust in short iterations as new hardware becomes available.
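The benchmarking step can be sketched as a small harness that times the same workload on each target and reports how far each one is from the fastest. This is a minimal sketch using only the Python standard library; the function names (`benchmark`, `compare_targets`, `slowdown_vs_best`) and the placeholder workloads are assumptions for illustration, not part of any particular tool.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(fn: Callable[[], object], repeats: int = 5, warmup: int = 1) -> float:
    """Return the median wall-clock time, in seconds, of calling fn()."""
    for _ in range(warmup):
        fn()  # warm-up runs are not timed
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def compare_targets(
    targets: Dict[str, Callable[[], object]], repeats: int = 5
) -> Dict[str, float]:
    """Benchmark each named target and return its median time."""
    return {name: benchmark(fn, repeats=repeats) for name, fn in targets.items()}


def slowdown_vs_best(results: Dict[str, float]) -> Dict[str, float]:
    """Express each time as a multiple of the fastest target's time."""
    best = min(results.values())
    if best <= 0:
        raise ValueError("timings must be positive to compute a ratio")
    return {name: t / best for name, t in results.items()}


if __name__ == "__main__":
    # Placeholder workloads; real targets would dispatch the same
    # computation to different hardware backends.
    targets = {
        "target_a": lambda: sum(i * i for i in range(200_000)),
        "target_b": lambda: sum(i * i for i in range(100_000)),
    }
    results = compare_targets(targets)
    for name, ratio in slowdown_vs_best(results).items():
        print(f"{name}: {results[name]:.4f}s ({ratio:.2f}x the fastest)")
```

Reporting the median rather than the mean is a design choice that makes the numbers less sensitive to an occasional slow run, which is common on shared CI machines.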

Adapting to AI Hardware Evolution

As AI and ML continue to advance, DevOps teams will need to stay adaptable and keep evaluating emerging technologies. Containerization and agile methodologies will remain central to keeping applications consistent and performant across diverse platforms. Teams that evaluate and integrate scalable, efficient technologies early will be better placed to keep up with the changing AI hardware ecosystem.

In conclusion, the evolution of specialized hardware for AI and ML presents both opportunities and challenges for DevOps professionals. Teams that test across hardware targets, measure performance on each one, and stay open to new tools can manage this complexity and deliver reliable AI-driven applications.